Bridging cloud and local LLMs

I was talking with my classmate Amit Bhor a few days ago about the limitations of inference on the edge, especially the memory requirements of the new MoE models (DeepSeek-R1, etc.). At the same time, edge inference is pretty desirable for things like privacy, disconnected scenarios, and cost.

Well, Minions attempts to tackle some of these challenges by adding a way for cloud models to cooperate with local models. The local models handle the interaction with data on your device, whereas the cloud models perform some of the heavy lifting/reasoning.

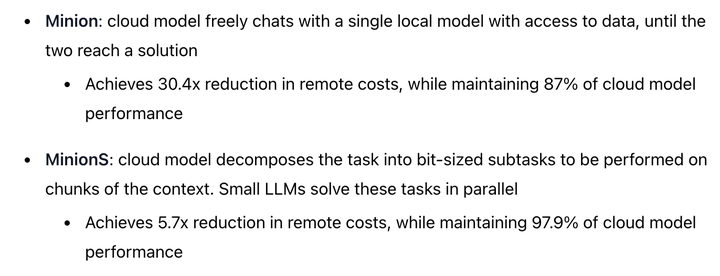
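To make the division of labor concrete, here is a minimal sketch of the general idea. It is not the actual Minions API: every name in it (`answer_with_cooperation`, `toy_local_model`, `toy_cloud_model`) is hypothetical, and both "models" are simple stand-in functions rather than real LLMs. The assumption is that the cloud model only ever sees the question and the short notes produced locally, never the raw document.

```python
from typing import Callable, List

# Hypothetical signatures: local model takes (instruction, chunk), cloud model takes a prompt.
LocalModel = Callable[[str, str], str]
CloudModel = Callable[[str], str]


def split_into_chunks(document: str) -> List[str]:
    """Split the on-device document into small chunks (here: one per line)."""
    return [line for line in document.split("\n") if line.strip()]


def answer_with_cooperation(
    question: str, document: str, local_model: LocalModel, cloud_model: CloudModel
) -> str:
    # 1. The cloud model sees only the question and writes a simple instruction.
    instruction = cloud_model(f"PLAN\nQUESTION: {question}")
    # 2. The local model applies that instruction to each chunk, on device.
    notes = [local_model(instruction, chunk) for chunk in split_into_chunks(document)]
    notes = [note for note in notes if note]  # drop chunks with nothing relevant
    # 3. The cloud model reasons over the short notes, not the raw document.
    return cloud_model(f"ANSWER\nQUESTION: {question}\nNOTES:\n" + "\n".join(notes))


def toy_local_model(instruction: str, chunk: str) -> str:
    """Stand-in for an on-device LLM: keeps sentences that mention revenue."""
    hits = [s.strip() for s in chunk.split(".") if "revenue" in s.lower()]
    return ". ".join(hits)


cloud_log: List[str] = []  # records everything that leaves the device


def toy_cloud_model(prompt: str) -> str:
    """Stand-in for a cloud LLM: plans first, then answers from the notes."""
    cloud_log.append(prompt)
    if prompt.startswith("PLAN"):
        return "Extract any sentence about revenue."
    return "Answer based on notes: " + prompt.split("NOTES:\n", 1)[1]


document = (
    "Patient id 12345 lives at 1 Main St.\n"
    "Q3 revenue was 10M.\n"
    "The office cat is named Bob."
)
result = answer_with_cooperation(
    "What was Q3 revenue?", document, toy_local_model, toy_cloud_model
)
print(result)
```

In this toy run, `cloud_log` holds exactly what was sent off the device, so you can check that unrelated private lines in the document never reach the cloud side. A real system would replace the two stand-ins with actual model calls and would need a smarter chunking and filtering strategy.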

The claimed gains are impressive:

Pretty cool! Techniques like this would make a lot of sense for on-device assistants like Siri or a future smart-home-controller AI.